CT No.56: These search results taste stale.

New content quality factors for SEOs (and others!) to consider

Next week Minneapolis heads into fall weather, but I’m going to keep eating peaches and tomatoes for as long as I can.

In this week’s issue:

What makes good content in 2020? It’s not just the entity.

A review of writer portfolio tool Authory

Content tech links of the week


The content intelligence conundrum: We don’t have to wait for an algorithm update to change up SEO strategies

I was hired for my first solely SEO-focused position in 2013, right after Google’s Hummingbird update and just as Google Analytics reduced the amount of organic keyword data to a mere trickle. Hummingbird was the first to incorporate natural language processing into search results, so SEO tactics like keyword stuffing were rendered completely useless.

The Hummingbird update meant that my SEO job wouldn’t be just ramming keywords into places where they didn’t fit, and I was grateful that I had missed the years when content had taken a back seat to algorithmic ranking factors. Hummingbird shook search results up for those who had stuffed their sites with spammy, repetitive content and made room for those with broader vocabularies, more facts and more depth of information on a broad variety of topics.

Much like other major Google algorithm updates like Panda and Penguin, Hummingbird shook up how SEO agencies did business. They started hiring more content specialists like yours truly to create topic-driven editorial plans and focusing less on random link-building or keyword stuffing.

Most of Google’s content-focused algorithm updates since Hummingbird have been pretty lackluster and just tweak the natural language processing to a finer and finer degree. As much as SEO blogs freak out to a comical extent over algorithm updates, Google updates don’t ruffle the feathers of SEO professionals or major brands in 2020 because algorithm updates aren’t a big deal if you have a functioning, high-quality website and a long-term visibility strategy. Even though Google has adopted significantly more machine learning on its back end with RankBrain et al, the types of websites that show up in organic search results are more or less the same as they were after Hummingbird.

The Hubspot strategy: Still treading water in 2020

Because content search optimization best practices have not changed radically since 2013, most SEO-first agencies are executing exactly the same tactics that they were seven years ago.* SEO-focused content strategists have gotten comfortable with the framework of Content pillars > Mid-quality blog content > Incrementally improved traffic. It’s the Hubspot or “content hub” blog strategy, and it still works, sort of, if you’re comfortable with shoving all your content into a blog. But it’s also what literally every other business is doing online.

I’m not saying these tactics are bad or ineffective. Many businesses are still figuring out the role of organic content and traffic in their marketing, especially as consumer tastes have shifted drastically, so a reliable stream of traffic from search is a nice safety net. Too many websites have fields of broken links, redirect chains and other broken pieces that need SEO cleanup. Optimizing titles and headers works, as does adding in well-placed calls to action and structured content markup where relevant. Many, many little optimization points can be tweaked with attention, improving website performance incrementally over time.

Similarly, websites as a whole haven’t changed much since the advent of responsive design and mobile-first thinking. Marketing agencies crank out websites with the same basic templates, cramming all content into a blog or mimicking the no-whitespace-left-unclaimed design of newspaper websites.

The assumption remains: as long as we keep publishing lots of content that performs ok in comparison to the other content on the web, we don’t have to spend as much time thinking about where that content comes from, or how our users feel about that content.

But in addition to the incremental traffic gains and the leads generated, our web content — whether from a brand or a blog or a lifestyle publication — is taking on the characteristics of a permanent digital resource. Instead of simply competing with similar companies to get the best search result, why aren’t we creating more original, trustworthy resources for our businesses as a whole?

I’d challenge content and SEO teams to raise the bar on Good Content again, without an algorithm update, moving beyond talking about eyeballs or conversions, but in publishing and defining information for a larger audience. In the age of rampant misinformation, how can we contribute to a more informed web and create better content resources at the same time?

The state of organic search results in 2020

I haven’t been shy about my frustration with most search results lately. On Google’s side, the algorithm seems to be reducing my more complex queries to basics, subbing in a less specific synonym when I chose a specific word with its own connotation for a reason. When I search, I receive results that are many years old, when most of the time I’d like the most relevant results from the most expert source.

But my most recent aggravation: either when researching a vertical for a client, searching for new software, or just looking up recipes or home topics, Google results are a giant pile of sameness. Every organic search result reads like the same marketing brochure, reiterating the same half-facts, the same pile of words with very little original information.

If you’re outside the SEO industry, you may respond with an answer like “bots are writing the content,” but it’s highly unlikely computers are generating highly ranking content. Or you may dismiss the entire practice of SEO as snake oil or lacking in value because all the search results sound exactly the same, and that’s your jam (but read this first before you dump SEO entirely!).

As I’ve written before, SEO is still viable and search remains a phenomenal innovation, even when the quality of search results is slightly lower than it was around 2016-17. I have a theory that, in the transition from optimizing for individual keywords to optimizing for topics or entities, our obsession with more-content-on-every-topic-quick-now is leading to more mediocre search results. Garbage in, garbage out, as they say.

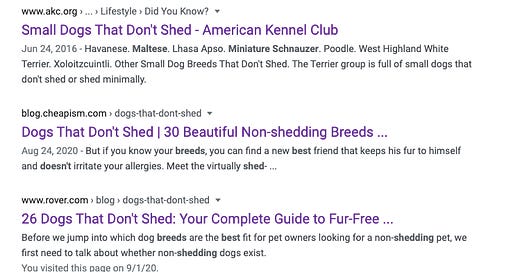

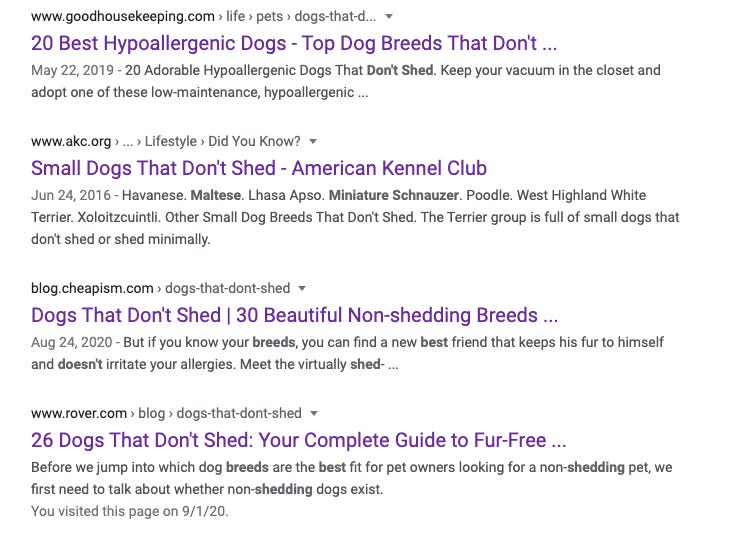

Check out the example of results for “best puppies that don’t shed.”* You’ll notice a striking similarity in all of the results. Every result is more or less the same: a list of photos (or, ugh, a clickthrough slideshow), with a short paragraph about each dog. Each page of content is different from the others, but reading through, you’ll notice a striking amount of sameness in content and design.

*This is an example query and not a personal one. I am a cat person.

All of these pages have been optimized to the fullest extent, based on keyword research and the language that audiences use. Each is full of facts that, if I were to independently verify, are presumably true. They use words specific to the entities “dogs” and “hypoallergenic.” Each page is technically original.

So why the stunning amount of sameness in vocabulary and sentence structure?

Because we’re relying too heavily on pillar-based optimization and not enough on originality and sourcing. It’s the same problem that we had with keyword-specific over-optimization, only now we’re replacing each keyword with its synonyms and related phrases.

What is the impact of entity optimization and content intelligence software?

In previous newsletters, I’ve reviewed content intelligence software, which compiles common keywords around the topic you wish to write about. Content intelligence software crawls top search results and surfaces the most common phrases among all pages that rank for a keyword, using algorithms like TF-IDF to sort the common phrases from the uncommon ones. So, for “hypoallergenic dogs,” you’ll get related phrases like

Chinese crested

Bichon frise

shiny coat

no shedding

These lists of keywords are compiled in briefs and sent to writers, who are then told “use all of these at least once.” Some SEO content optimization software tracks and scores similar keyword usage as you write. So, if you haven’t used the phrase “pet hair” in the “hypoallergenic dogs” article, you’ll be encouraged to do so.
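As a rough illustration of the process described above, here is a minimal sketch of TF-IDF-style term surfacing plus a brief-coverage check. It is not any vendor’s actual implementation: the function names (`rank_terms`, `missing_phrases`), the simplified weighting, and the sample pages are all assumptions made for the example.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def rank_terms(ranking_pages, background_pages):
    """Score terms from the ranking pages with a simplified TF-IDF.

    Term frequency is counted across the ranking pages only; document
    frequency is counted across ranking and background pages together,
    so words that appear everywhere (like "the") score zero.
    """
    ranking_docs = [tokenize(page) for page in ranking_pages]
    all_docs = ranking_docs + [tokenize(page) for page in background_pages]

    doc_freq = Counter()
    for doc in all_docs:
        doc_freq.update(set(doc))  # count each term once per page

    term_freq = Counter()
    for doc in ranking_docs:
        term_freq.update(doc)

    n_docs = len(all_docs)
    scores = {
        term: count * math.log(n_docs / doc_freq[term])
        for term, count in term_freq.items()
    }
    # Highest score first; ties broken alphabetically.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


def missing_phrases(draft, brief_phrases):
    """Return the phrases from a brief that do not appear in the draft."""
    normalized = " " + " ".join(tokenize(draft)) + " "
    return [
        phrase
        for phrase in brief_phrases
        if " " + " ".join(tokenize(phrase)) + " " not in normalized
    ]


# Made-up sample pages, for illustration only.
ranking = [
    "The bichon frise has a shiny coat with no shedding",
    "The Chinese crested and the bichon frise are dogs with no shedding",
]
background = [
    "The best tomato recipes for the summer",
    "The guide to the city parks and trails",
]
for term, score in rank_terms(ranking, background)[:5]:
    print(f"{term}: {score:.2f}")
```

Calling `missing_phrases` on a draft with a brief such as `["Chinese crested", "shiny coat", "pet hair"]` returns whichever phrases the writer has not used yet, which is roughly the nudge an optimization tool gives as you write.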

I’m a huge fan of content intelligence software and absolutely think it’s worth the investment, especially if your writers are struggling with SEO. But content intelligence software only identifies how similar your content is to other search results, not how unique it is unto itself.

One reason web content sounds the same is that both content intelligence tools and writers are using the exact same source material: the top 10-20 existing search results. For many SEO bloggers, “I did my research” means that you’ve just looked at the top five search results, maybe added 1-2 more questions or words or a different structure, and then just rewritten the damn thing. (Don’t feel bad about it; I have executed this exact same process many times too.) It’s not plagiarism but it’s not exactly original. The ideas are all the same, top to bottom. It’s as if we’re drawing from the exact same well — which also happens to be the well that machine-generated content is drawing from. It’s a giant echo factory with low-quality information, and it’s contributing in its own little way to the misinformation epidemic.

Google has always warned against duplicate content, acknowledging that you can’t just dupe pages on a website and expect them to rank twice (a very old SEO trick that has been deprecated since the early aughts). But many SEO folks have always taken that to mean “duplicate content on your own website” and not “the exact same words and ideas as every other piece of content about the same subject on every other website.”

Defining “good content” in the misinformation age

Even the most talented writer churning out a blog post in a few hours on a topic is not going to be able to provide perspective on a topic they barely know. And a content intelligence algorithm can only tell you how alike one piece of content is to another piece of content.

(Another reminder: even if you get a “good score” in a piece of optimization software, you have only optimized content for that software’s best guess at what Google thinks is valuable and not Google’s algorithm or user needs. Even the most complex metrics and scores are directional, not absolute.)
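To make the “how alike” idea concrete, here is a minimal sketch of one common likeness measure: cosine similarity between word-count vectors. It is an assumption for illustration, not a description of what any particular tool or Google does, and the score it returns is directional in exactly the sense described above.

```python
import math
import re
from collections import Counter


def word_counts(text):
    """Count lowercase alphabetic words in the text."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine_similarity(text_a, text_b):
    """Return a 0-to-1 score for how alike two texts' word usage is."""
    a, b = word_counts(text_a), word_counts(text_b)
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text is not similar to anything
    return dot / (norm_a * norm_b)


# Made-up snippets, for illustration only.
draft = "Ten dogs that do not shed, with a photo of each breed"
existing = "Fifteen dogs that do not shed and a short paragraph on each breed"
print(round(cosine_similarity(draft, existing), 2))
```

A high score here says only that two pages use the same words at similar rates. It says nothing about whether either page is accurate, well sourced, or useful.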

The “good content” we all strive for is not just an echo of other search results. Good content takes a truly unique approach to a subject while still using the common words and phrases about that topic.

Good content in 2020:

Is readable and crawlable by search engines

Is well structured according to user behavior and topic matter (i.e., not buried in a blog somewhere)

Contains words and phrases that are characteristic of the entity you are trying to rank for (e.g., “Chinese crested”)

Also contains words, phrases, and syntax not seen in other similar search results for the query

Presents the topic so that your target audiences can understand, with web-friendly writing

Is factual and originally sourced, with citations

Uses original photos, illustration, design, audio and videos to enhance and complement content

Is accessible for different types of learners — ideally so readers, visual thinkers and video fans can understand the resource at hand

How to optimize content for originality

Admittedly, I haven’t yet done much new research into whether content originality is officially a ranking factor in Google’s algorithm, and SEO thought leaders are often too mired in nuts-n-bolts algo updates, and too busy drooling over the possibilities of machine learning, to talk about originality in the content they’re already creating. But I can tell you based on experience: well-optimized, originally presented content with cited, trustworthy sources always ranks highly when part of an SEO/UX-focused content strategy.

It’s a Chinese crested, of course.

Both Google and users prefer content that looks different from other search results! We can make the web look different, less spammy, by pushing in new directions.

Publishers and content marketers, and the SEO software aimed at the two, need to invest in sourcing and originality as part of a high-quality content strategy. This includes:

Fewer, but far higher quality content pieces published each month

Original interviews with subject matter experts

Clear citations and links to original sources where applicable

Comprehensive, longer content that covers (or links to) deep resources for those who want to learn more

Original photos or illustrations (created specifically for your website, i.e., not stock)

Original video with closed captions included

Original data (and not just repeating stats from other sources)

New ideas or perspectives related to a specific topic that haven’t necessarily been represented before in search or magazines or tv (i.e., what your brain is for!)

If you’re representing a brand: When selecting SEO providers and writers, brands should ask about how content is sourced and how originality is maintained. Well-researched, original content takes more time, and you’ll likely be paying more to create less content. Your stakeholders may say, “Well I’ve never seen anything like this before.” And you’ll say, “Yes, that’s how you show up better in search results, attract audiences, and build a brand.”

If you’re an editor or content manager: you will likely need to build in more rounds of revisions. You’ll likely challenge your writers with your demands for originality. You may use phrases like, “Yes, but what’s your idea here?” And you will feel like Don Draper but also a bit like a jerk but also — you’ll find that the outcomes are better on all accounts. (But please do this responsibly and build in time for revisions if you’re going to tell someone to rethink their ideas. It can definitely be a bit of a gut punch if someone criticizes your core idea, so, you know, be kind, respectful and constructive.)

If you already create content that is both original and SEO-optimized: talk more about your research process and your sourcing, and point out how originality could help your client score new wins in organic search.

If you build SEO or content intelligence software: figure out how to include uniqueness, originality and sourcing in your quality assessment algorithms.
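One possible starting point for a uniqueness signal, sketched under heavy assumptions: measure what share of a draft’s three-word sequences (shingles) appear in none of the competing pages. The names `shingles` and `novelty_score` are hypothetical, and this captures only phrase-level novelty. It cannot tell whether the ideas or the sourcing are original.

```python
import re


def shingles(text, n=3):
    """Return the set of n-word sequences found in the text."""
    words = re.findall(r"[a-z]+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def novelty_score(draft, competitor_pages, n=3):
    """Share of the draft's shingles that no competitor page contains.

    0.0 means every phrase already exists in the competing results;
    1.0 means none of them do.
    """
    draft_shingles = shingles(draft, n)
    if not draft_shingles:
        return 0.0  # draft is too short to measure
    seen = set()
    for page in competitor_pages:
        seen |= shingles(page, n)
    return len(draft_shingles - seen) / len(draft_shingles)


# Made-up snippets, for illustration only.
competitors = ["The bichon frise is a small dog that does not shed"]
draft = "Our vet explained why the bichon frise is a small dog with big needs"
print(round(novelty_score(draft, competitors), 2))
```

A score like this would sit alongside a similarity score rather than replace it: the list of good-content traits earlier in the essay asks for both the characteristic phrases and language not seen in other results.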

In the coming years, the tech industry will try to make AI-generated content happen. For those of us who believe that computers will never have original ideas, and that the ideas we weave into our writing are valuable, we need to optimize our critical thinking muscles a little more adeptly, especially in the content we create.

And in the meantime, let’s focus on making the best content possible with high quality, original information, so we can make the best search results possible.


Collecting your digital clippings: Authory review

This week another large Minneapolis-based arts publication, The Walker Reader, is being either retired or reimagined. As the arts publication for the Walker Art Center, the Walker Reader happens to be one of the only publications where I was ever paid as a freelance writer, and my short article about a Kickstarter event remains on the website, hanging out until the next content audit comes around to knock it out. I don’t expect it to stick around through another website redesign; its archival value is minimal, and I’m sure the Walker, as an arts institution (and not a journalism outlet), has much more important content to preserve, although as long as they have me, I will happily have my words sit on their site.

But in the case that my scribblings are retired forever and redirected to the homepage, I can use a tool like Authory to preserve my content.

Authory at a glance

Authory is a portfolio tool for writers, including full-time journalists, content marketers, or occasional subject matter experts. For a monthly fee, Authory permanently captures the text of all your digital bylines. (That is, as long as Authory is in business.)

As digital-only news outlets fragment and standards for preserving digital information are piecemeal at best, a tool like Authory provides security for those who want to maintain their digital writings in some sort of official, authoritative fashion.

Digital news outlets shouldn’t come and go with the frequency they do, and I hope we find ourselves on the path to better ones with sustainable archives. Especially since venture- and PE-backed content publishers can be flippant and digital content strategies change all the time, the permalinks to our content may not be so permanent.

For those of us who write on the marketing side, the bylines are fewer and far between. The trade pub whose content I wrote for an entire year no longer has my byline on the site, and the blog posts I wrote at past agencies have mostly been scrubbed of my identity. All cool — but it would be nice to have all those clippings collected in one place.

Authory operates similarly to professional journalist tool Muck Rack: the tool crawls the outlets that have run your bylines regularly and will update your content automatically. Unlike Muck Rack, though, it’s a paid model that accommodates content from all writers — not just professional journalists. For those of us who have migrated into non-journalism content development — presumably because we have a sense of self-preservation and a desire to earn a sustainable living — Authory is a fine solution for maintaining a portfolio.

(I’m also extremely curious about how rights and ownership come into play with tools like Authory and the overbroad work-for-hire agreements that brands, agencies and publishers all have as standard in their contracts. Like most digital-first aggregation companies, Authory’s terms of service require that you have permission to reprint or publish any articles housed on Authory, so if you upload without permission for purposes of a portfolio, I’d recommend that you keep your visibility private.)

Authory also lets writers

Publish a free automated newsletter for fans, so subscribers are updated whenever you publish something new

Download hard copies (in html or xml) of all of their content

Catalog content from behind paywalls for their own private portfolios

Toggle privacy settings — if you want to share your portfolio with just a few or with the public, your visibility is yours to control

Create collections — related articles, beats you’ve covered, your greatest hits

If you do not want to maintain a portfolio website of your own (and I completely understand this sentiment in 2020, although it’s really not that hard to build an annually updated portfolio on your own), Authory is a solid interim solution.

Note: by writing this review, I may receive a free year of Authory, although I did not know that until after I wrote the review…


Content tech links of the week

Moz’s research about gender bias in the SEO industry is very good. Moz has always been relatively equitable, but the industry as a whole is definitely bro-y and dismissive of women’s voices, so I’m glad they put this together.

I would love every local newspaper to have an ad-free tier. Here are some local news successes at the Bay Area News Group.

New research on how people use Google search in 2020 from Brian Dean at Backlinko.

I’m not a data scientist, but I understood and enjoyed parts of this explanation of how to remove gender bias from a word vector algorithm.

Turns out I wasn’t wrong, blockchain is just a giant relational table that soaks up energy and not much else. From The Correspondent, whose footnote/citation/callout software is just grrrrrrreat.

